Chapter 6 From data visualization to statistical modelling

Patterns in the data provide clues about relationship or covariation.Now that we know how to visualize the various relationships, we can proceed to learn more about how to formally test the relationship.

Statistical models are tools for extracting patterns out of data.

Statistics represent a common method of presenting information helping us to understand what the data are telling us.

Descriptive (or summary) statistics summarise the raw data and allow data users to interpret a dataset more easily.Descriptive statistics can describe the shape, centre and spread of a dataset.

Inferential statistics are used to infer conclusions about a population from a sample of that population. It includes estimation (An estimate is a value that is inferred for a population based on data collected from a sample of units from that population), and hypothesis testing.

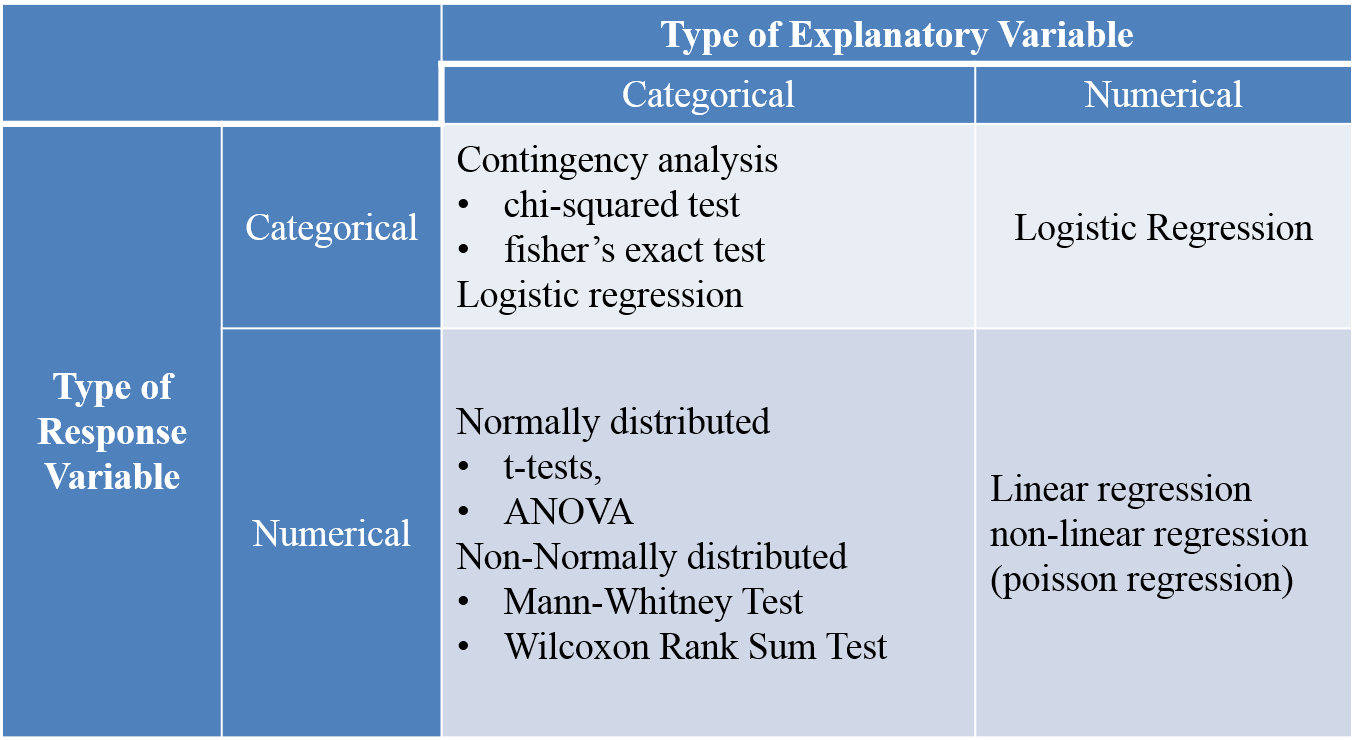

Figure 6.1: stats

6.1 Two continuous variables

6.1.1 Simple linear regression

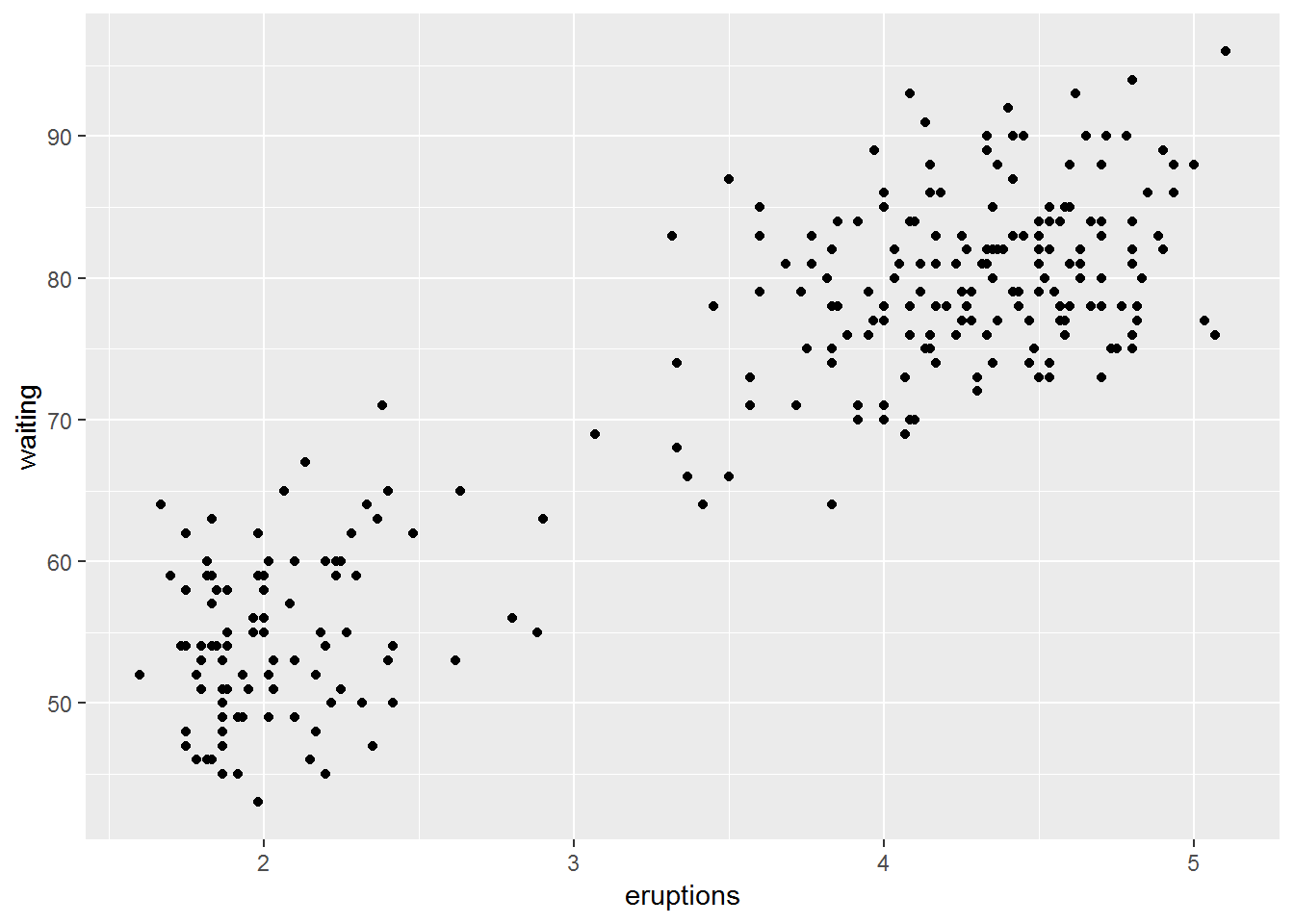

The techique we used here is called Simple linear regression, where there is one dependent variable (continuous) and one independent variable (continuous). When there are more than one independent variable (continuous), you need to look for something called Multiple linear regression.

head(faithful)## eruptions waiting

## 1 3.600 79

## 2 1.800 54

## 3 3.333 74

## 4 2.283 62

## 5 4.533 85

## 6 2.883 55ggplot(faithful)+

geom_point(aes(eruptions, waiting))

6.1.2 correlation vs. linear regression

Correlation and linear regression each explore the relationship between two quantitative variables. (Salvatore S. Mangiafico)

Correlation determines if one variable varies systematically as another variable changes. It does not specify that one variable is the dependent variable and the other is the independent variable. Often, it is useful to look at which variables are correlated to others in a data set, and it is especially useful to see which variables correlate to a particular variable of interest.

In contrast, linear regression specifies one variable as the independent variable and another as the dependent variable. The resultant model relates the variables with a linear relationship.

The tests associated with linear regression are parametric and assume normality, homoscedasticity, and independence of residuals, as well as a linear relationship between the two variables.

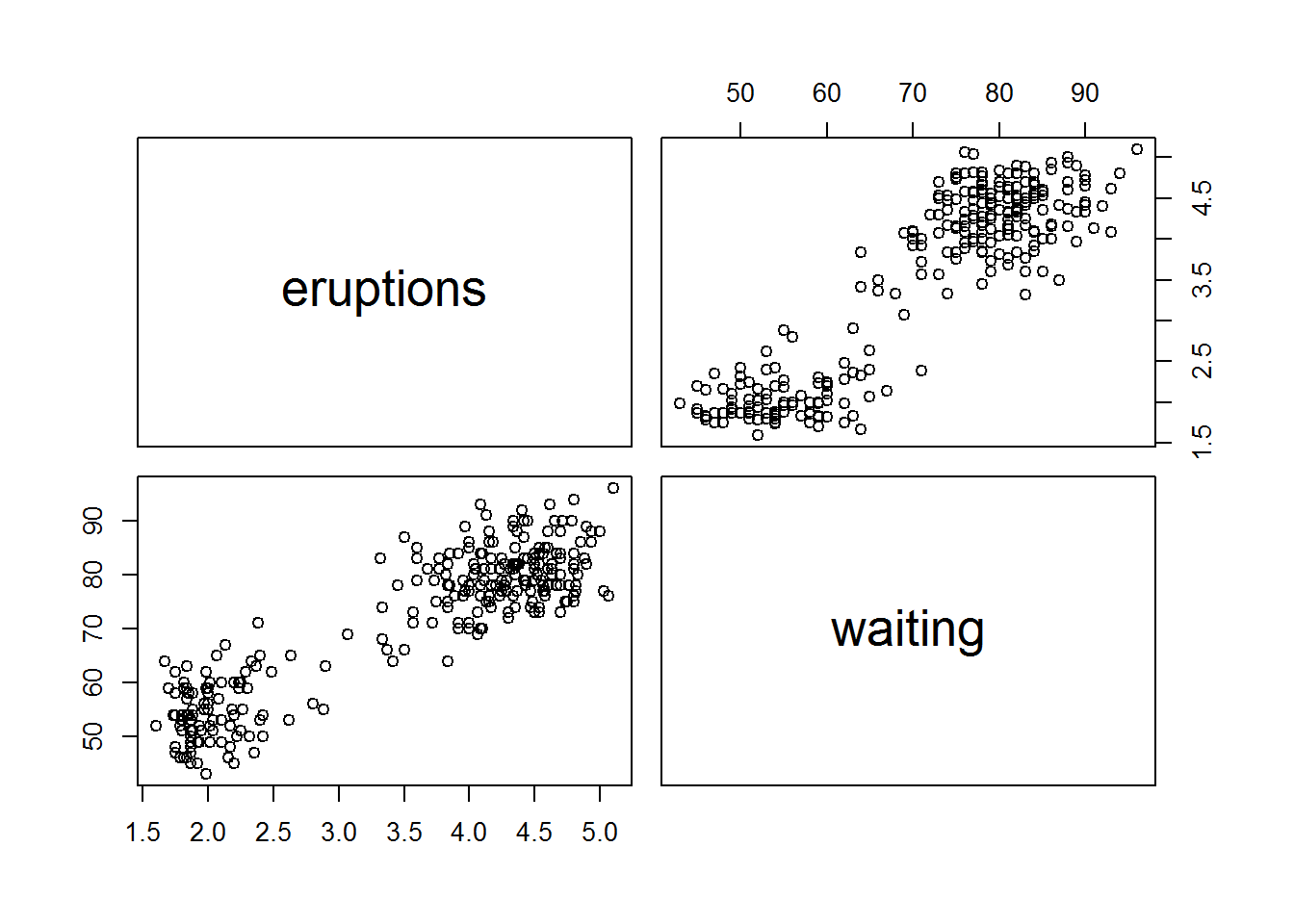

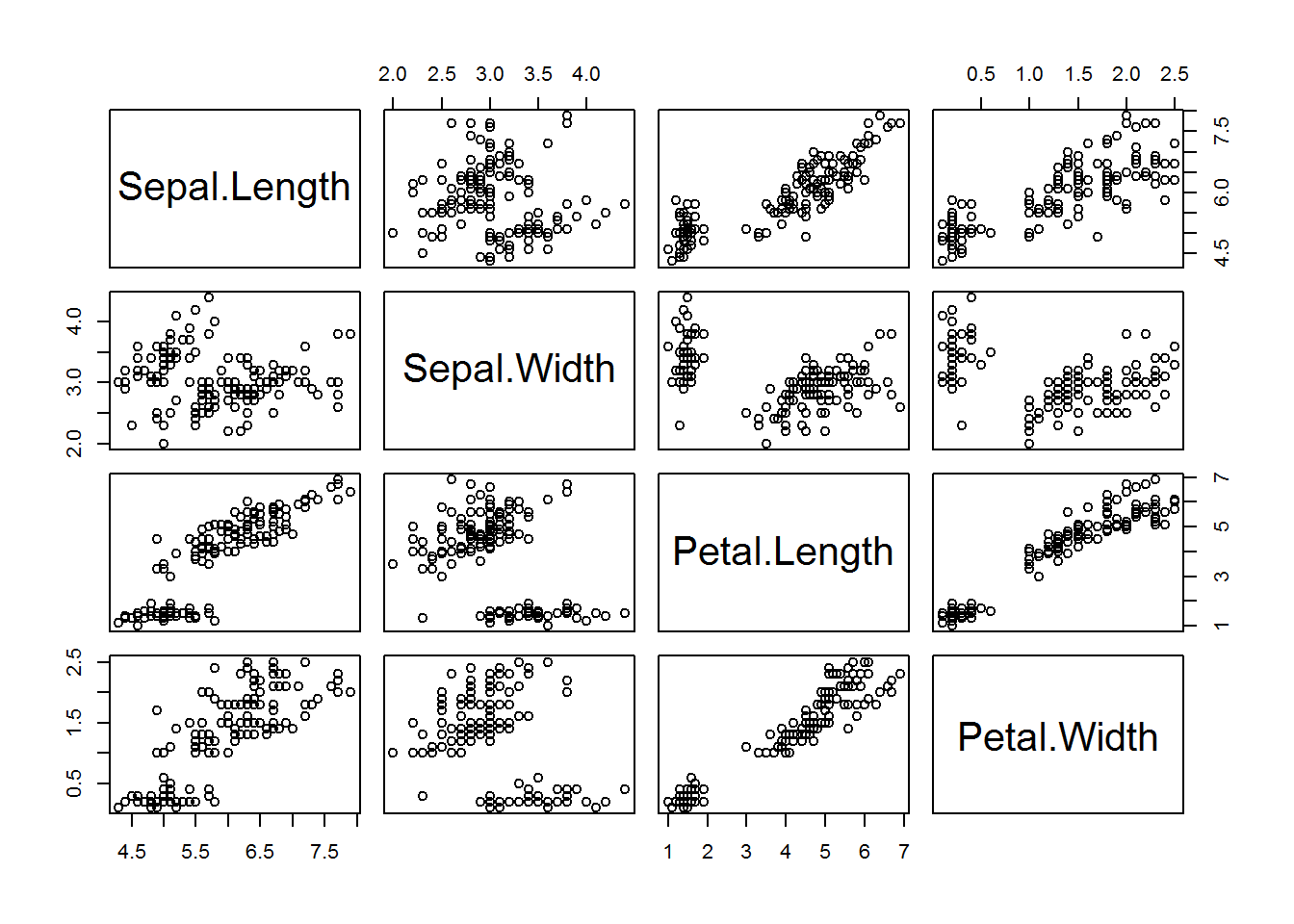

6.1.3 Correlation matrix

pairs(data=faithful,

~ eruptions + waiting)

pairs(data=iris,

~ Sepal.Length + Sepal.Width + Petal.Length +Petal.Width)

corr.test(faithful,

use = "pairwise",

method = "pearson",

adjust = "none")## Call:corr.test(x = faithful, use = "pairwise", method = "pearson",

## adjust = "none")

## Correlation matrix

## eruptions waiting

## eruptions 1.0 0.9

## waiting 0.9 1.0

## Sample Size

## [1] 272

## Probability values (Entries above the diagonal are adjusted for multiple tests.)

## eruptions waiting

## eruptions 0 0

## waiting 0 0

##

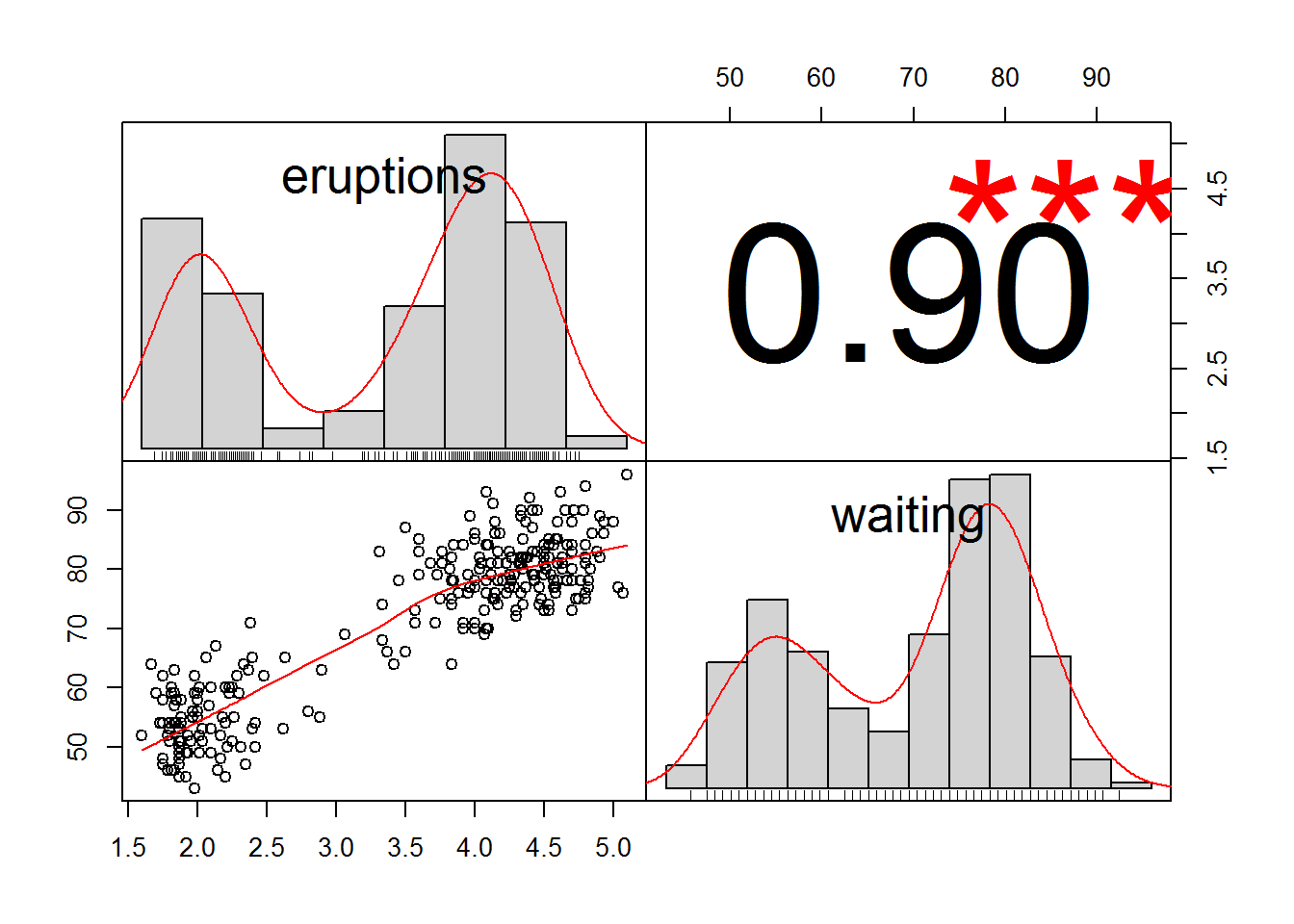

## To see confidence intervals of the correlations, print with the short=FALSE option6.1.4 Pearson, Spearman, and Kendall regression

# library(PerformanceAnalytics)

chart.Correlation(faithful,

method="pearson",

histogram=TRUE,

pch=16)

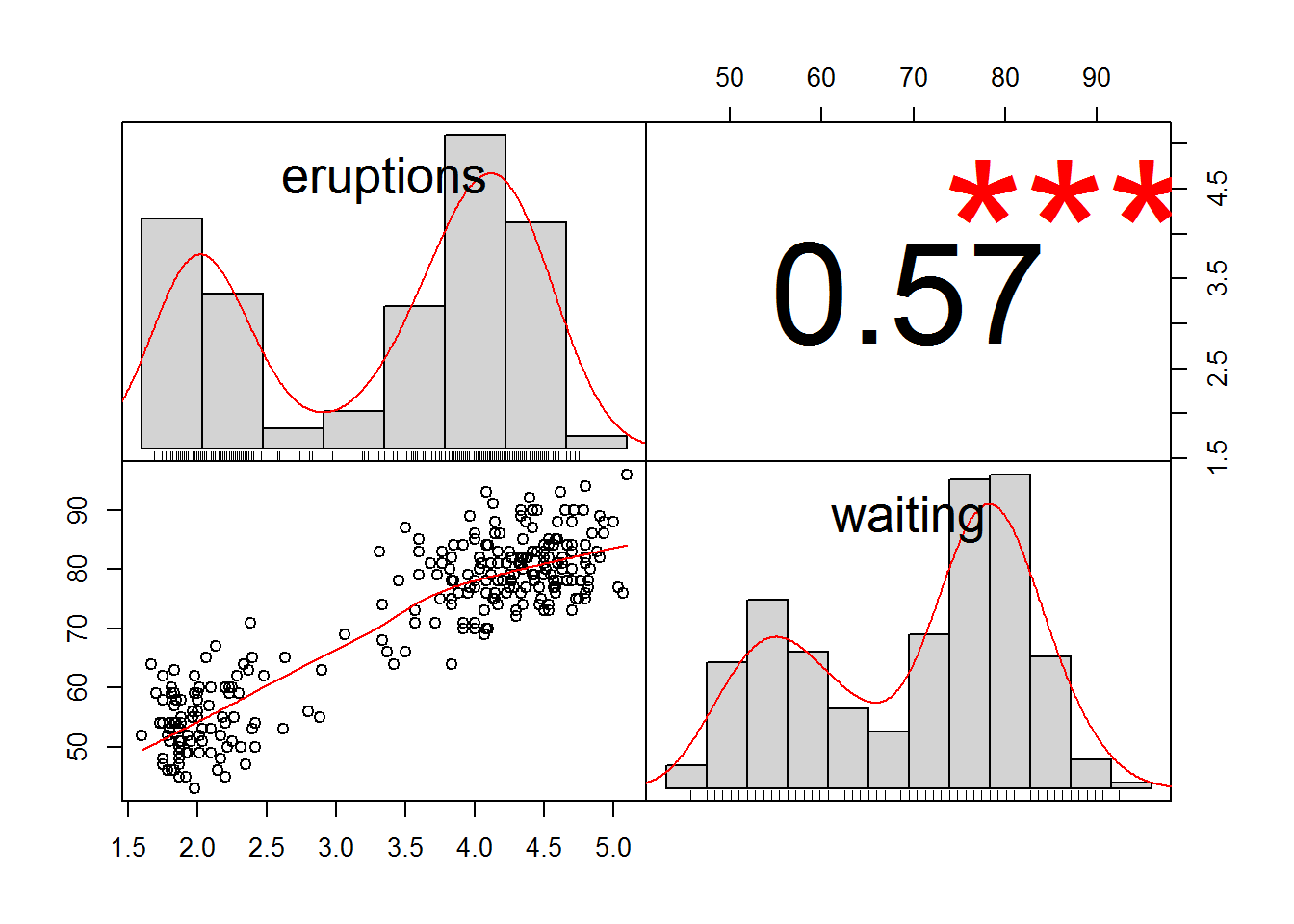

chart.Correlation(faithful,

method="kendall",

histogram=TRUE,

pch=16)

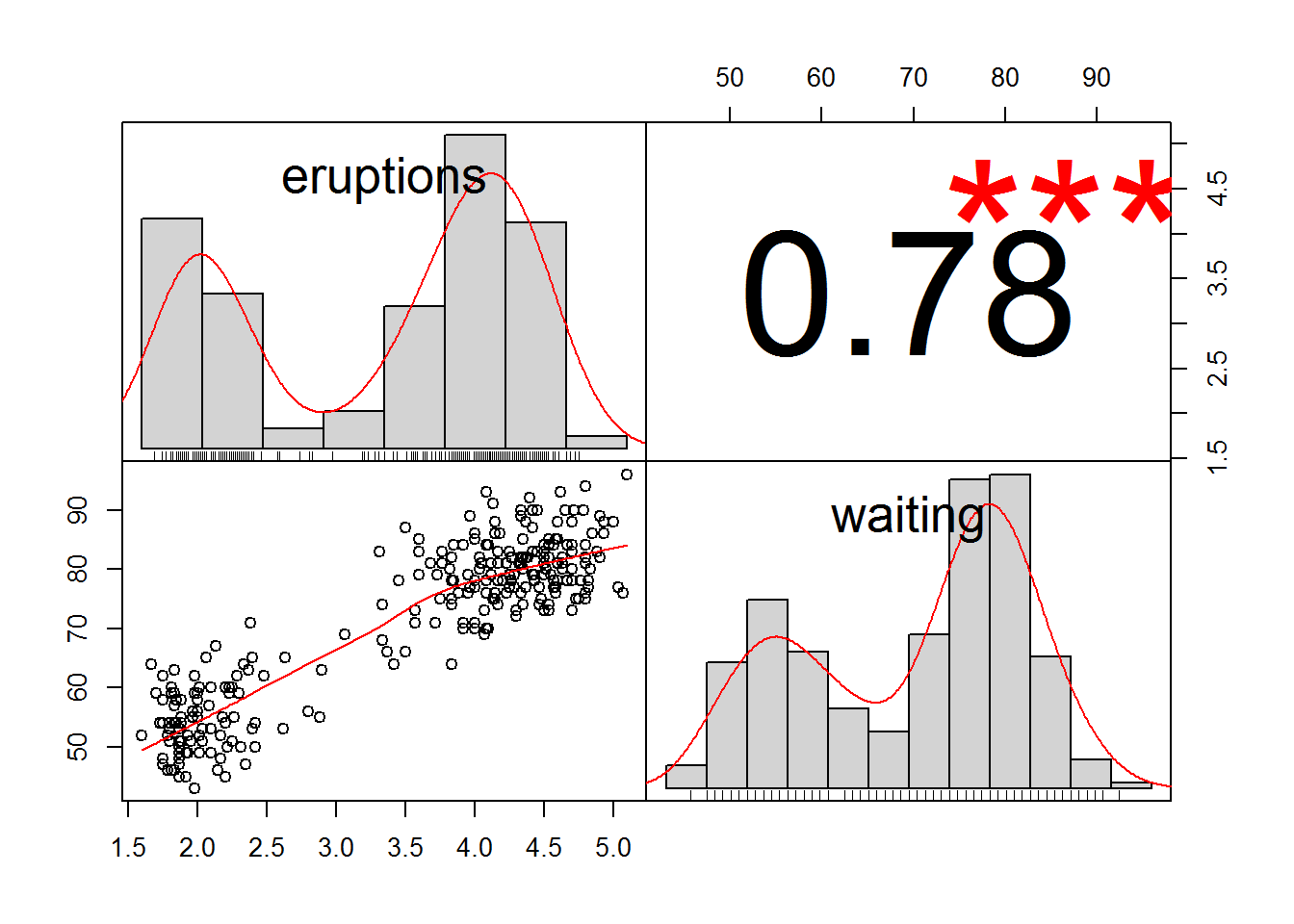

chart.Correlation(faithful,

method="spearman",

histogram=TRUE,

pch=16)## Warning in cor.test.default(as.numeric(x), as.numeric(y), method = method):

## Cannot compute exact p-value with ties

6.1.4.1 Effect size

The statistics r, rho, and tau are used as effect sizes for Pearson, Spearman, and Kendall regression, respectively. These statistics vary from –1 to 1, with 0 indicating no correlation, 1 indicating a perfect positive correlation, and –1 indicating a perfect negative correlation. Like other effect size statistics, these statistics are not affected by sample size.

Interpretation of effect sizes necessarily varies by discipline and the expectations of the experiment. They should not be considered universal. An interpretation of r is given by Cohen (1988). It is probably reasonable to use similar interpretations for rho and tau.

small: 0.10 – < 0.30

medium: 0.30 – < 0.50

large: ≥ 0.50

6.1.4.2 Pearson correlation

The test used for Pearson correlation is a parametric analysis that requires that the relationship between the variables is linear, and that the data be bivariate normal. Variables should be interval/ratio. The test is sensitive to outliers.

The correlation coefficient, r, can range from +1 to –1, with +1 being a perfect positive correlation and –1 being a perfect negative correlation. An r of 0 represents no correlation whatsoever. The hypothesis test determines if the r value is significantly different from 0.

cor.test( ~ eruptions + waiting,

data=faithful,

method = "pearson")##

## Pearson's product-moment correlation

##

## data: eruptions and waiting

## t = 34.089, df = 270, p-value < 2.2e-16

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## 0.8756964 0.9210652

## sample estimates:

## cor

## 0.9008112# the results report the p-value for the hypothesis test as well as the r value, written as cor, 0.849.6.1.4.3 Kendall correlation

Kendall correlation is considered a nonparametric analysis. - It is a rank-based test that does not require assumptions about the distribution of the data.

- Variables can be interval/ratio or ordinal.

The correlation coefficient from the test is tau, which can range from +1 to –1, with +1 being a perfect positive correlation and –1 being a perfect negative correlation. A tau of 0 represents no correlation whatsoever. The hypothesis test determines if the tau value is significantly different from 0.

As a technical note, the cor.test function in R calculates tau-b, which handles ties in ranks well.

The test is relatively robust to outliers in the data. The test is sometimes cited for being reliable when there are small number of samples or when there are many ties in ranks.

cor.test( ~ eruptions + waiting,

data=faithful,

method = "kendall")##

## Kendall's rank correlation tau

##

## data: eruptions and waiting

## z = 13.902, p-value < 2.2e-16

## alternative hypothesis: true tau is not equal to 0

## sample estimates:

## tau

## 0.57476746.1.4.4 Spearman correlation

Spearman correlation is considered a nonparametric analysis.

- It is a rank-based test that does not require assumptions about the distribution of the data.

- Variables can be interval/ratio or ordinal.

The correlation coefficient from the test, rho, can range from +1 to –1, with +1 being a perfect positive correlation and –1 being a perfect negative correlation. A rho of 0 represents no correlation whatsoever. The hypothesis test determines if the rho value is significantly different from 0.

Spearman correlation is probably most often used with ordinal data. It tests for a monotonic relationship between the variables. It is relatively robust to outliers in the data.

cor.test( ~ eruptions + waiting,

data=faithful,

method = "spearman")## Warning in cor.test.default(x = c(3.6, 1.8, 3.333, 2.283, 4.533, 2.883, :

## Cannot compute exact p-value with ties##

## Spearman's rank correlation rho

##

## data: eruptions and waiting

## S = 744660, p-value < 2.2e-16

## alternative hypothesis: true rho is not equal to 0

## sample estimates:

## rho

## 0.77797216.1.5 Linear regression

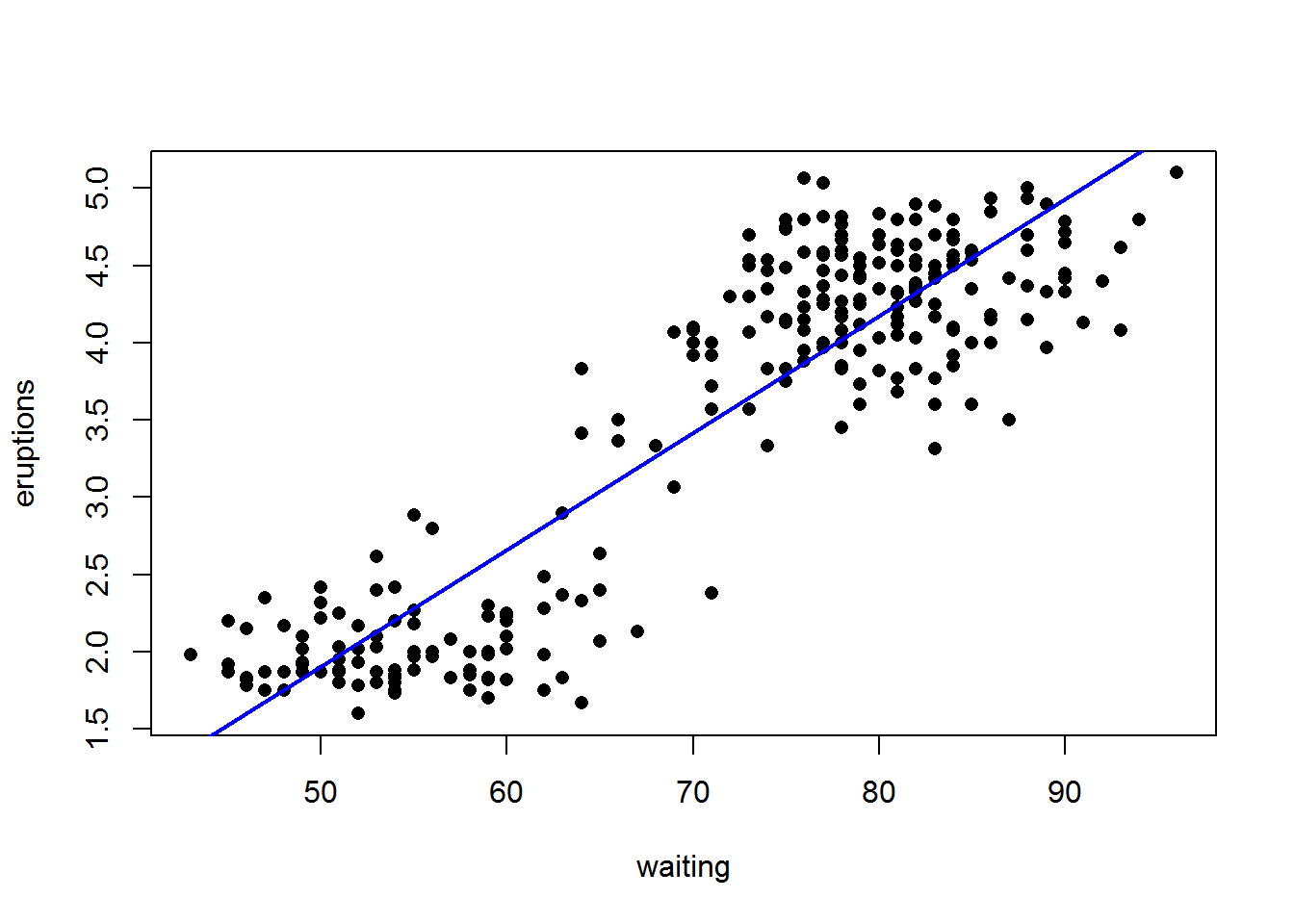

Linear regression is a very common approach to model the relationship between two interval/ratio variables. The outcome of linear regression includes estimating the intercept and the slope of the linear model.

Multiple, nominal, and ordinal independent variables

If there are multiple independent variables of interval/ratio type in the model, then linear regression expands to multiple regression.

If the independent variable were of nominal type, then the linear regression would become a one-way analysis of variance.

Handling independent variables of ordinal type can be complicated. Often they are treated as either nominal type or interval/ratio type, although there are drawbacks to each approach.

Assumptions

Linear regression assumes - a linear relationship between the two variables, - normality of the residuals, - independence of the residuals, - homoscedasticity of residuals.

Linear regression can be performed with the lm function, which was the same function we used for analysis of variance.

model = lm(eruptions ~ waiting,

data = faithful)

summary(model)##

## Call:

## lm(formula = eruptions ~ waiting, data = faithful)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.29917 -0.37689 0.03508 0.34909 1.19329

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -1.874016 0.160143 -11.70 <2e-16 ***

## waiting 0.075628 0.002219 34.09 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.4965 on 270 degrees of freedom

## Multiple R-squared: 0.8115, Adjusted R-squared: 0.8108

## F-statistic: 1162 on 1 and 270 DF, p-value: < 2.2e-16The summary function for lm model objects includes estimates for model parameters (intercept and slope), as well as an r-squared value for the model and p-value for the model.

- How to read the model?

The model produces a coefficient for the intercept (-1.87) and a coefficient for the slope (0.07);

Each coefficient comes with three other numbers: its standard error, a t-value, and a p-value. The p-value tells us whether the coefficient is significantly different from zero.

If the coefficient for a predictor is zero, there is no relation at all between the predictor and the dependent variable, in which case it is worthless as a predictor. In order to ascertain whether a coefficient is significantly different from zero, and hence potentially useful, a two-tailed t-test is carried out, using the t-value and the associated degrees of freedom.

The t-value itself is the value of the coefficient divided by its standard error. This standard error is a measure of how sure we are about the estimate of the coefficient. The smaller the standard error, the smaller the confidence interval around the estimate, the less likely it is that zero will be included in the acceptance region, and hence the smaller the probability that it might just as well be zero.

The residual standard error is a measure of how unsuccessful the model is; it gauges the variability in the dependent variable that we can’t handle through the predictor variables. The better a model is, the smaller its residual standard error will be.

The multiple R-squared equals 0.8115. This R-squared is the squared correlation coefficient, r2, which quantifies, on a scale from 0 to 1, the proportion of the variance that the model explains.

plot(eruptions ~ waiting,

data=faithful,

pch=16,

xlab = "waiting",

ylab = "eruptions")

abline(model,

col = "blue",

lwd = 2)

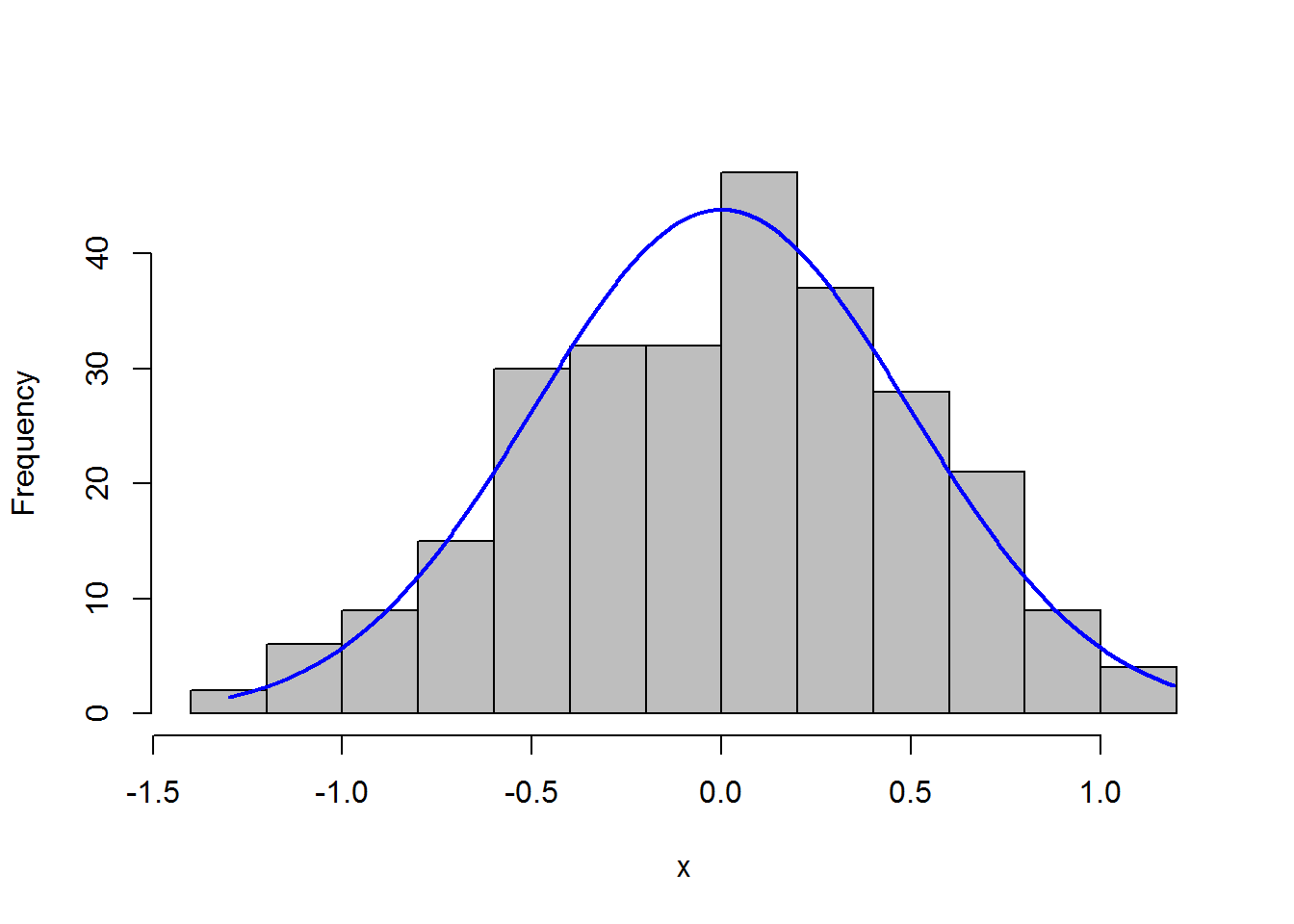

x = residuals(model)

#library(rcompanion)

plotNormalHistogram(x)

6.2 Categorical(independent) with continuous(dependent)

6.2.1 T-tests

T-tests are commonly used to compare the means of two samples or between one sample and a fixed value. In other words, the independent variable should be categorical and have two levels.

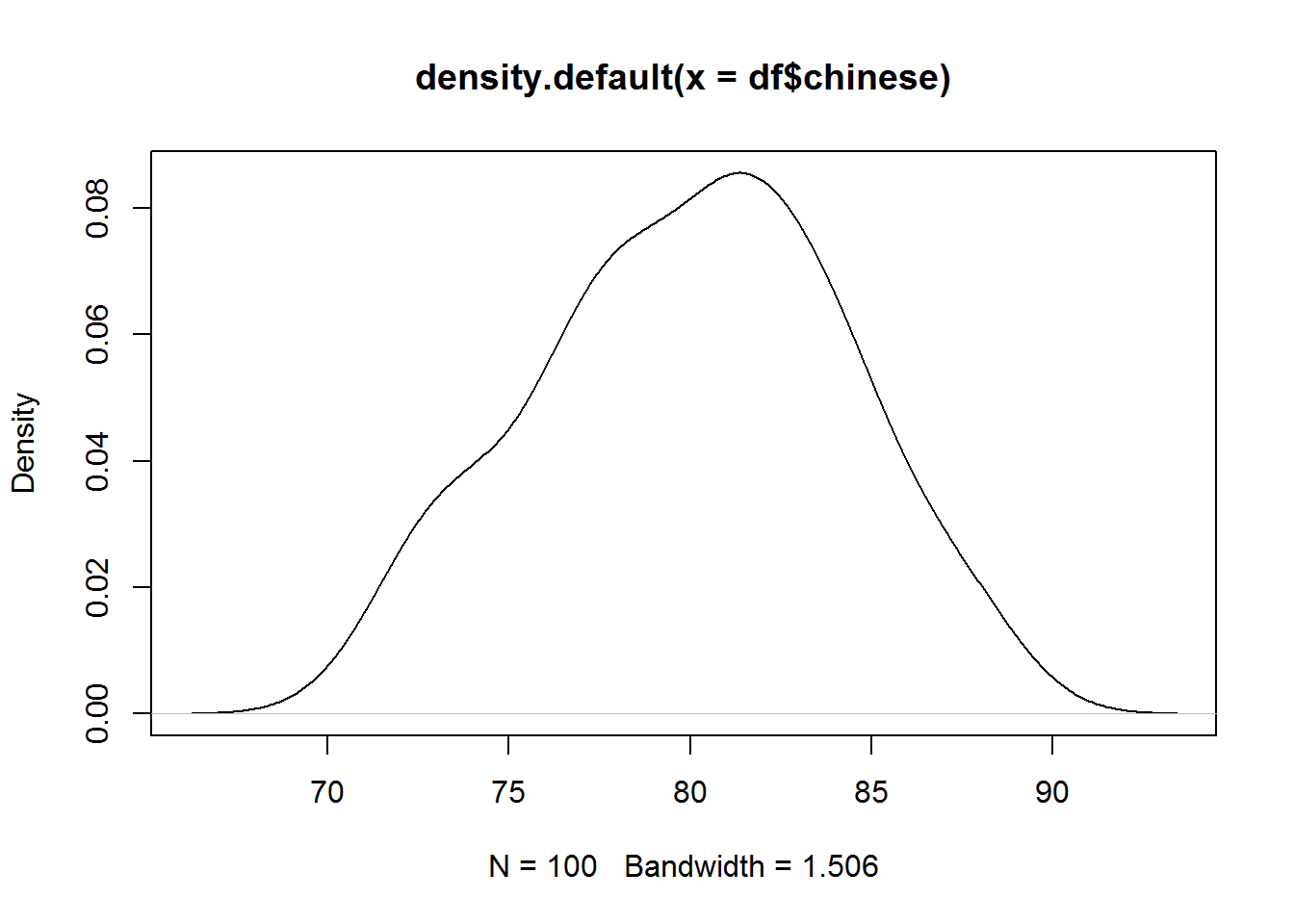

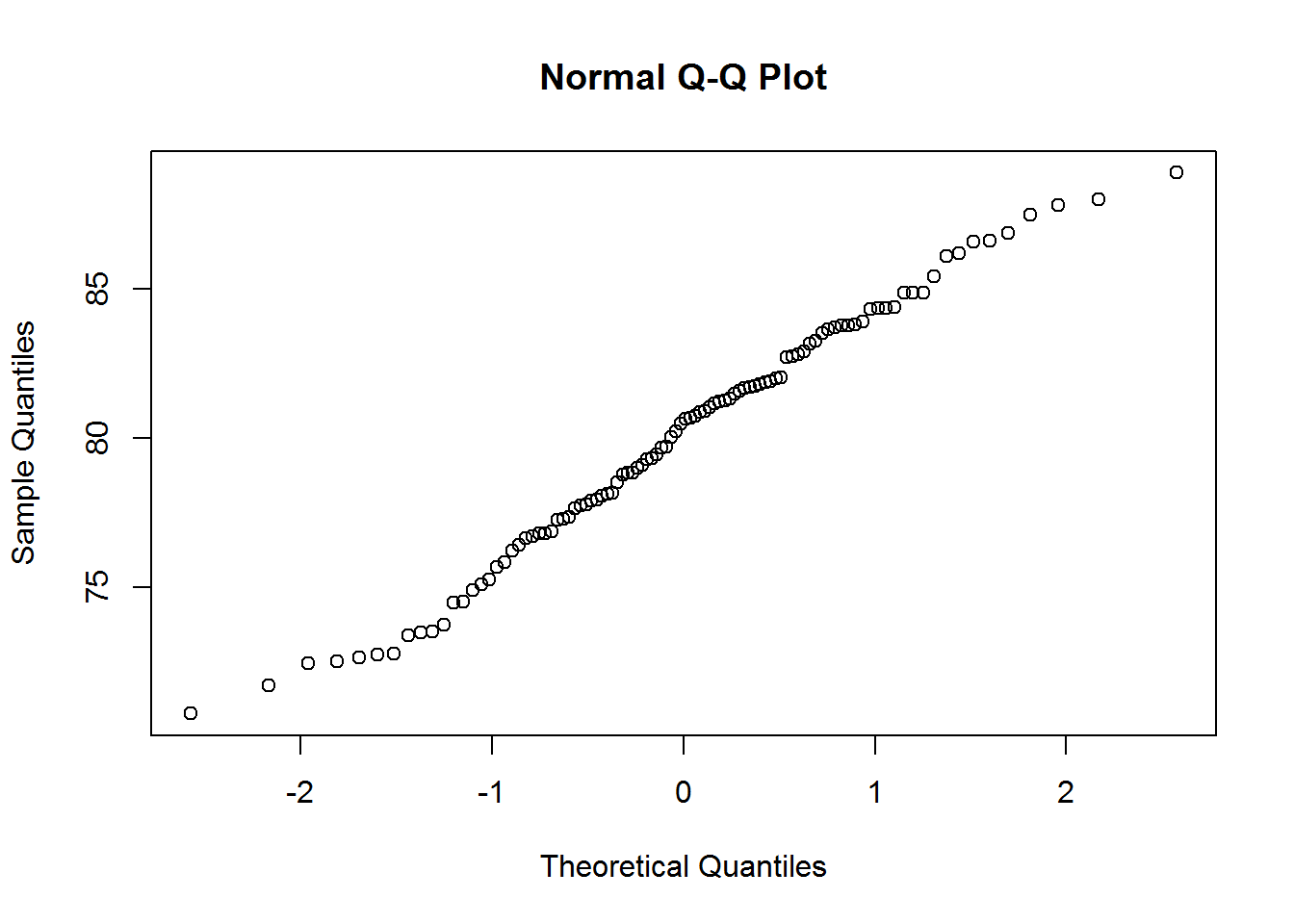

6.2.1.1 Requirements:

Observations between groups are independent.That is, not paired or repeated measures data

Data for each population are normally distributed.Moderate skewness is permissible if the data distribution is unimodal without outliers.

# distribution

plot(density(df$chinese))

qqnorm(df$chinese)

# formal tests

# null hypothesis: the distribution is normal

shapiro.test(df$chinese)##

## Shapiro-Wilk normality test

##

## data: df$chinese

## W = 0.98571, p-value = 0.3566ks.test(df$chinese, "pnorm", mean(df$chinese), sd(df$chinese))##

## One-sample Kolmogorov-Smirnov test

##

## data: df$chinese

## D = 0.059435, p-value = 0.8717

## alternative hypothesis: two-sided- For Student’s t-test, the two samples need to have the same variance. However, Welch’s t-test, which is used by default in R, does not assume equal variances.

#independent sample t-test---compare means

class1 = df[df$class == 1, ]$chinese

class2 = df[df$class == 2, ]$chinese

var.test(class1,class2)#compare variance##

## F test to compare two variances

##

## data: class1 and class2

## F = 1.6497, num df = 24, denom df = 24, p-value = 0.2273

## alternative hypothesis: true ratio of variances is not equal to 1

## 95 percent confidence interval:

## 0.7269864 3.7437034

## sample estimates:

## ratio of variances

## 1.6497346.2.1.2 One-sample t-test

#one sample t-test: one group and a fixed value

t.test(df$chinese, mu = 78)##

## One Sample t-test

##

## data: df$chinese

## t = 4.7923, df = 99, p-value = 5.804e-06

## alternative hypothesis: true mean is not equal to 78

## 95 percent confidence interval:

## 79.18028 80.84827

## sample estimates:

## mean of x

## 80.01428t.test(df$chinese, mu = 78, alternative = "greater")##

## One Sample t-test

##

## data: df$chinese

## t = 4.7923, df = 99, p-value = 2.902e-06

## alternative hypothesis: true mean is greater than 78

## 95 percent confidence interval:

## 79.31639 Inf

## sample estimates:

## mean of x

## 80.01428t.test(df$chinese, mu = 78, alternative = "less")##

## One Sample t-test

##

## data: df$chinese

## t = 4.7923, df = 99, p-value = 1

## alternative hypothesis: true mean is less than 78

## 95 percent confidence interval:

## -Inf 80.71216

## sample estimates:

## mean of x

## 80.01428wilcox.test(df$chinese, mu = 78)# skewed distributions##

## Wilcoxon signed rank test with continuity correction

##

## data: df$chinese

## V = 3771, p-value = 1.849e-05

## alternative hypothesis: true location is not equal to 786.2.1.3 Independent sample t-test

t.test(class1,class2)##

## Welch Two Sample t-test

##

## data: class1 and class2

## t = -0.63224, df = 45.278, p-value = 0.5304

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -3.196186 1.668777

## sample estimates:

## mean of x mean of y

## 79.47697 80.240676.2.1.4 Paired sample t-test

t.test(df$chinese, df$math, paired = T)##

## Paired t-test

##

## data: df$chinese and df$math

## t = 40.353, df = 99, p-value < 2.2e-16

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## 19.61140 21.63976

## sample estimates:

## mean of the differences

## 20.625586.2.1.5 wilcox.test

wilcox.test(class1,class2)#not normally distributed##

## Wilcoxon rank sum test

##

## data: class1 and class2

## W = 297, p-value = 0.773

## alternative hypothesis: true location shift is not equal to 0wilcox.test(df$chinese, df$math, paired = T)##

## Wilcoxon signed rank test with continuity correction

##

## data: df$chinese and df$math

## V = 5050, p-value < 2.2e-16

## alternative hypothesis: true location shift is not equal to 06.2.2 ANOVA

When the independent variable has more than two levels, we need to use ANOVA.

We can use avo function in R or build a linear model.

dif_class = aov (chinese ~ class, data = df)

summary(dif_class)## Df Sum Sq Mean Sq F value Pr(>F)

## class 3 34.5 11.51 0.644 0.588

## Residuals 96 1714.4 17.86How to report: There is a significant difference among different classes in terms of their Chinese scores, F (3, 96) = 0.87, p = .46.

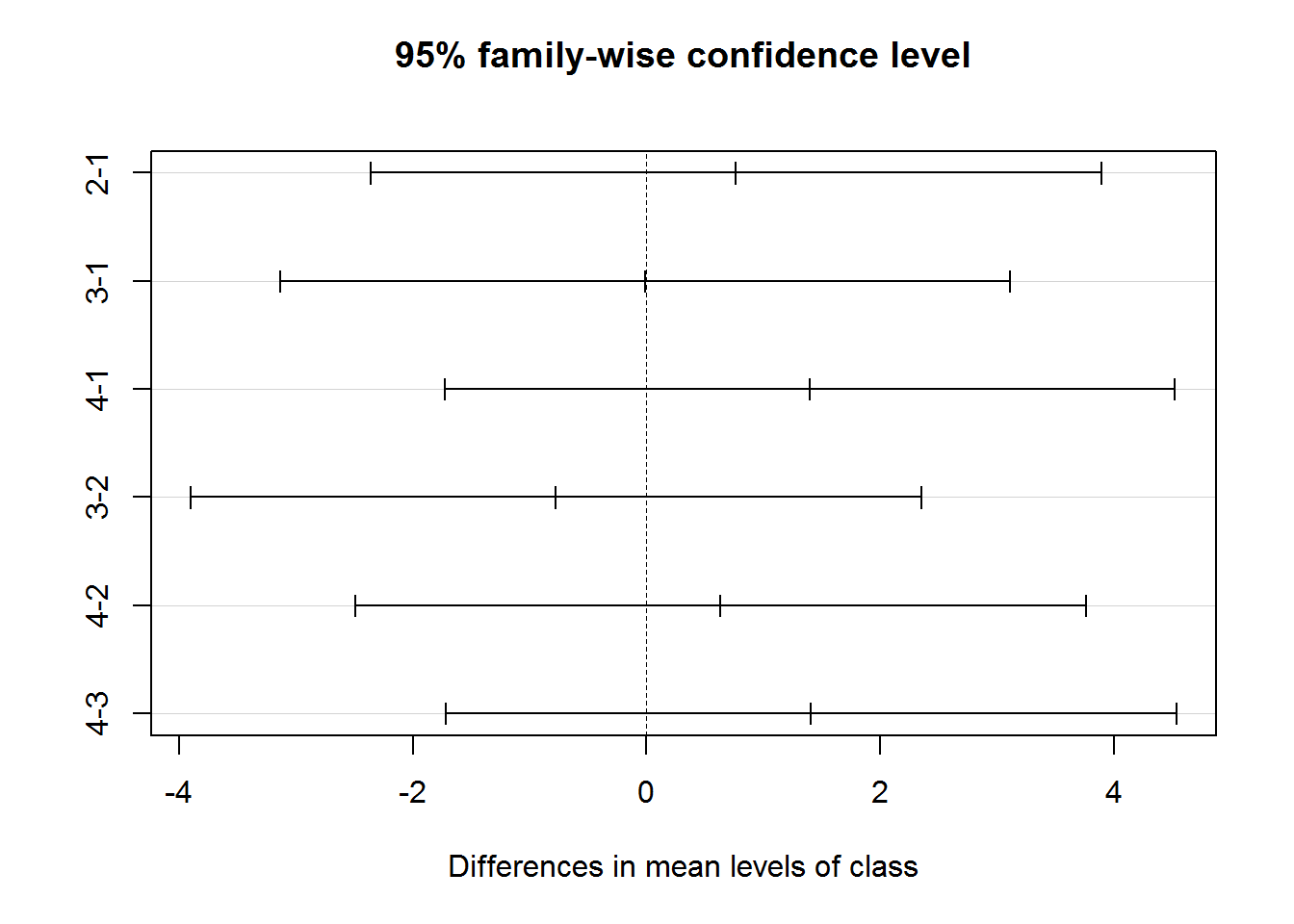

After we run the model, it is very likely that we need to conduct pairwised comparison. However, we cannot simple use multiple t-tests but we need to correct the alpha value.

There are different ways to do the correction:

- Bonferroni correction: a/n

- Tukey’s Honestly Significant difference: assuming the means for each level of the factor should be based on equal numbers of observation.

#how is the difference like?

TukeyHSD(dif_class)## Tukey multiple comparisons of means

## 95% family-wise confidence level

##

## Fit: aov(formula = chinese ~ class, data = df)

##

## $class

## diff lwr upr p adj

## 2-1 0.76370420 -2.361498 3.888907 0.9191350

## 3-1 -0.01161454 -3.136817 3.113588 0.9999997

## 4-1 1.39714388 -1.728058 4.522346 0.6478474

## 3-2 -0.77531874 -3.900521 2.349884 0.9157821

## 4-2 0.63343968 -2.491763 3.758642 0.9516030

## 4-3 1.40875842 -1.716444 4.533961 0.6417724# diff= the difference in the means

# lwr = the lower end points of the confidence interval

# p adj = adjusted p value

plot(TukeyHSD(dif_class))

In the general linear model approach, residuals are normally distributed;groups should have the same variance, or homoscedasticity.

Observations among groups are independent. That is, not paired or repeated measures data

Moderate deviation from normally-distributed residuals is permissible

#two-way ANOVA

m1 <- aov(chinese ~ sex + class, data = df)

summary(m1)## Df Sum Sq Mean Sq F value Pr(>F)

## sex 1 0.4 0.379 0.021 0.885

## class 3 35.6 11.869 0.658 0.580

## Residuals 95 1713.0 18.031m2 <- aov(chinese ~ sex * class, data = df)

summary(m2)## Df Sum Sq Mean Sq F value Pr(>F)

## sex 1 0.4 0.379 0.021 0.886

## class 3 35.6 11.869 0.643 0.589

## sex:class 3 14.5 4.838 0.262 0.853

## Residuals 92 1698.5 18.462m3 <- aov(chinese ~ sex + class + sex:class, data = df)# same as model2

summary(m3)## Df Sum Sq Mean Sq F value Pr(>F)

## sex 1 0.4 0.379 0.021 0.886

## class 3 35.6 11.869 0.643 0.589

## sex:class 3 14.5 4.838 0.262 0.853

## Residuals 92 1698.5 18.462TukeyHSD(m1)## Tukey multiple comparisons of means

## 95% family-wise confidence level

##

## Fit: aov(formula = chinese ~ sex + class, data = df)

##

## $sex

## diff lwr upr p adj

## m-f -0.123184 -1.810542 1.564174 0.8850721

##

## $class

## diff lwr upr p adj

## 2-1 0.783413646 -2.357430 3.924257 0.9145077

## 3-1 -0.006687175 -3.147530 3.134156 0.9999999

## 4-1 1.411925967 -1.728917 4.552769 0.6436723

## 3-2 -0.790100821 -3.930944 2.350742 0.9125370

## 4-2 0.628512320 -2.512331 3.769356 0.9532751

## 4-3 1.418613142 -1.722230 4.559456 0.6401878TukeyHSD(m2)## Tukey multiple comparisons of means

## 95% family-wise confidence level

##

## Fit: aov(formula = chinese ~ sex * class, data = df)

##

## $sex

## diff lwr upr p adj

## m-f -0.123184 -1.831271 1.584903 0.8864196

##

## $class

## diff lwr upr p adj

## 2-1 0.783413646 -2.396527 3.963355 0.9171646

## 3-1 -0.006687175 -3.186628 3.173254 0.9999999

## 4-1 1.411925967 -1.768015 4.591867 0.6523152

## 3-2 -0.790100821 -3.970042 2.389840 0.9152503

## 4-2 0.628512320 -2.551429 3.808453 0.9547830

## 4-3 1.418613142 -1.761328 4.598554 0.6488846

##

## $`sex:class`

## diff lwr upr p adj

## m:1-f:1 -0.51008912 -5.879907 4.859729 0.9999898

## f:2-f:1 -0.01509347 -5.533219 5.503032 1.0000000

## m:2-f:1 0.90883730 -4.043832 5.861506 0.9991490

## f:3-f:1 0.34492104 -4.788373 5.478215 0.9999991

## m:3-f:1 -0.86544310 -6.108469 4.377582 0.9995748

## f:4-f:1 1.25085045 -4.118968 6.620669 0.9961086

## m:4-f:1 1.11130442 -3.926032 6.148640 0.9972307

## f:2-m:1 0.49499565 -5.328225 6.318216 0.9999953

## m:2-m:1 1.41892641 -3.871548 6.709401 0.9908015

## f:3-m:1 0.85501016 -4.604926 6.314946 0.9997000

## m:3-m:1 -0.35535399 -5.918582 5.207874 0.9999993

## f:4-m:1 1.76093957 -3.921942 7.443821 0.9786628

## m:4-m:1 1.62139354 -3.748425 6.991212 0.9815885

## m:2-f:2 0.92393077 -4.517014 6.364876 0.9994876

## f:3-f:2 0.36001451 -5.245845 5.965874 0.9999993

## m:3-f:2 -0.85034963 -6.556861 4.856161 0.9997844

## f:4-f:2 1.26594392 -4.557277 7.089165 0.9974757

## m:4-f:2 1.12639789 -4.391727 6.644523 0.9983082

## f:3-m:2 -0.56391625 -5.614152 4.486319 0.9999692

## m:3-m:2 -1.77428040 -6.936014 3.387453 0.9621391

## f:4-m:2 0.34201315 -4.948462 5.632488 0.9999993

## m:4-m:2 0.20246712 -4.750202 5.155136 1.0000000

## m:3-f:3 -1.21036415 -6.545649 4.124921 0.9967004

## f:4-f:3 0.90592941 -4.554006 6.365865 0.9995601

## m:4-f:3 0.76638337 -4.366911 5.899677 0.9997816

## f:4-m:3 2.11629355 -3.446935 7.679522 0.9356674

## m:4-m:3 1.97674752 -3.266278 7.219773 0.9385226

## m:4-f:4 -0.13954603 -5.509364 5.230272 1.00000006.3 Two categorical variables

6.3.1 Chisquare t-test

When our data involves two categorical variables, and we want to know if these two variables are related or independent to each other, we can use the chisquare test.

The null hypothesis of the independence assumption is to be rejected if the p-value of the following Chi-squared test statistics is less than a given significance level α.

Here is an example: In the dataset survey, the Smoke column records the students smoking habit, while the Exer column records their exercise level. The allowed values in Smoke are “Heavy”, “Regul” (regularly), “Occas” (occasionally) and “Never”. As for Exer, they are “Freq” (frequently), “Some” and “None”.

tbl = table(survey$Smoke, survey$Exer)

chisq.test(tbl) ## Warning in chisq.test(tbl): Chi-squared approximation may be incorrect##

## Pearson's Chi-squared test

##

## data: tbl

## X-squared = 5.4885, df = 6, p-value = 0.4828As the p-value 0.4828 is greater than the .05 significance level, we do not reject the null hypothesis that the smoking habit is independent of the exercise level of the students.